Wellcome to Open Reading Group's library,

You can find here all papers liked or uploaded by Open Reading Grouptogether with brief user bio and description of her/his academic activity.

### Upcoming readings:

No upcoming readings for now...

### Past Readings:

- 21/05/2018 [Graph Embedding Techniques, Applications, and Performance: A Survey](https://papers-gamma.link/paper/52)

- 14/05/2018 [A Fast and Provable Method for Estimating Clique Counts Using Turán’s Theorem](https://papers-gamma.link/paper/24)

- 07/05/2018 [VERSE: Versatile Graph Embeddings from Similarity Measures](https://papers-gamma.link/paper/48)

- 30/04/2018 [Hierarchical Clustering Beyond the Worst-Case](https://papers-gamma.link/paper/45)

- 16/04/2018 [Scalable Motif-aware Graph Clustering](https://papers-gamma.link/paper/18)

- 02/04/2018 [Practical Algorithms for Linear Boolean-width](https://papers-gamma.link/paper/40)

- 26/03/2018 [New Perspectives and Methods in Link Prediction](https://papers-gamma.link/paper/28/New%20Perspectives%20and%20Methods%20in%20Link%20Prediction)

- 19/03/2018 [In-Core Computation of Geometric Centralities with HyperBall: A Hundred Billion Nodes and Beyond](https://papers-gamma.link/paper/37)

- 12/03/2018 [Diversity is All You Need: Learning Skills without a Reward Function](https://papers-gamma.link/paper/36)

- 05/03/2018 [When Hashes Met Wedges: A Distributed Algorithm for Finding High Similarity Vectors](https://papers-gamma.link/paper/23)

- 26/02/2018 [Fast Approximation of Centrality](https://papers-gamma.link/paper/35/Fast%20Approximation%20of%20Centrality)

- 19/02/2018 [Indexing Public-Private Graphs](https://papers-gamma.link/paper/19/Indexing%20Public-Private%20Graphs)

- 12/02/2018 [On the uniform generation of random graphs with prescribed degree sequences](https://papers-gamma.link/paper/26/On%20the%20uniform%20generation%20of%20random%20graphs%20with%20prescribed%20d%20egree%20sequences)

- 05/02/2018 [Linear Additive Markov Processes](https://papers-gamma.link/paper/21/Linear%20Additive%20Markov%20Processes)

- 29/01/2018 [ESCAPE: Efficiently Counting All 5-Vertex Subgraphs](https://papers-gamma.link/paper/17/ESCAPE:%20Efficiently%20Counting%20All%205-Vertex%20Subgraphs)

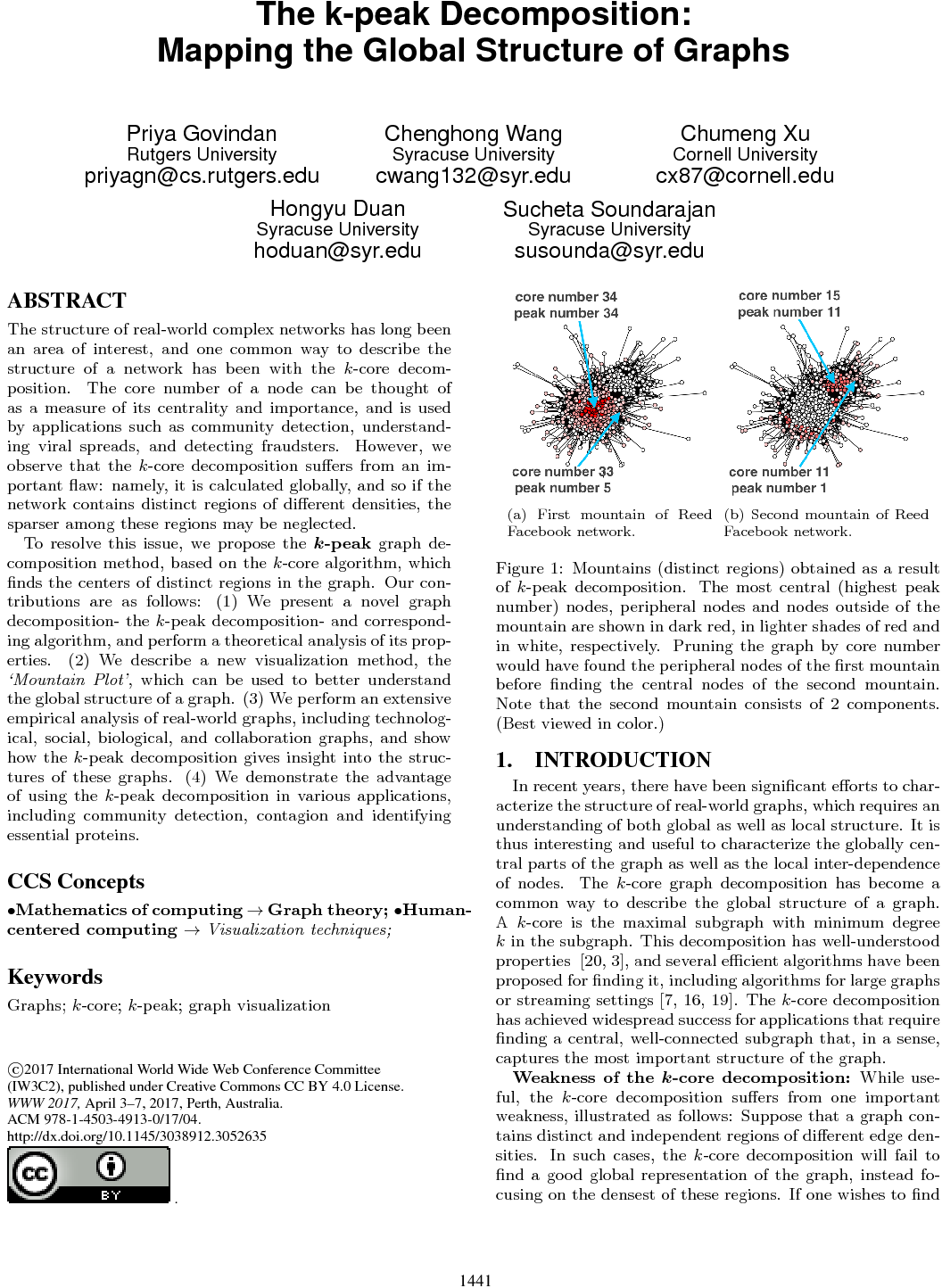

- 22/01/2018 [The k-peak Decomposition: Mapping the Global Structure of Graphs](https://papers-gamma.link/paper/16/The%20k-peak%20Decomposition:%20Mapping%20the%20Global%20Structure%20of%20Graphs)

☆

1

Comments:

### General:

A very simple and very scalable algorithm with theoretical guarantees to compute an approximation of the closeness centrality for each node in the graph.

Maybe the framework can be adapted to solve other problems. In particular, "Hoeffding's theorem" is very handy.

### Parallelism:

Note that the algorithm is embarrassingly parallel (the loop over the $k$ reference nodes can be done in parallel with almost no additional work).

### Experiments:

It would be nice to have some experiments on large real-world graphs.

An efficient (and parallel) implementation of the algorithm is available here: https://github.com/maxdan94/BFS

### Related work:

More recent related work by Paolo Boldi and Sebastiano Vigna:

- http://mmds-data.org/presentations/2016/s-vigna.pdf

- https://arxiv.org/abs/1011.5599

- https://papers-gamma.link/paper/37

It also gives an approximation of the centrality for each node in the graph and it also scales to huge graphs. Which algorithm is "the best"?

☆

2

Comments:

Just a few remarks from me,

### Definition of mountain

Why is the k-peak in the k-mountain ? Why is the core number of a k-peak changed when removing the k+1 peak ?

###More informations on k-mountains

Showing a k-mountain on figure 2 or on another toy exemple would have been nice.

Besides, more theoretical insights on k-mountains are missing : complexity in time and space of the algorithm used to find the k-mountains ?

How many k-mountains include a given node ?

Less than the degeneracy of the graph but maybe it's possible to say more.

Regarding the complexity of the algorithm used to build the k-mountains, I'm Not sure I understand everything but when the k-peak decomposition is done, for each k-contour, a new k-core decomposition must be run on the subgraph obtained when removing the k-contour. Then it must be compared whith the k-core decomposition of the whole graph. All these operations take time so the complexity of the algorithm used to build the k-mountains is I think higher than the complexity of the k-peak decomposition.

Besides multiple choices are made for building the k-mountains, it seems to me that this tool deserves a broader analysis.

Same thing for the mountains shapes, some insights are missing, for instance mountains may have an obvious staircase shape, does it mean something ?

###6.2 _p_

I don't get really get what is p?

###7.2 is unclear

but it may be related to my ignorance of what is a protein.

### Lemma 2 (bis):

Lemma 2 (bis): Algorithm 1 requires $O(\delta\cdot(N+M))$ time, where $\delta$ is the degeneracy of the input graph.

Proof: $k_1=\delta$ and the $k_i$ values must be unique non-negative integers, there are thus $\delta$ distinct $k_i$ values or less. Thus, Algorithm 1 enters the while loop $\delta$ times or less leading to the stated running time.

We thus have a running time in $O(\min(\sqrt{N},\delta)\cdot (M+N))$.

Note that $\delta \leq \sqrt{M}$ and $\delta<N$ and in practice (in large sparse real-world graphs) it seems that $\delta\lessapprox \sqrt{N}$.

### k-core decomposition definition:

The (full) definition of the k-core decomposition may come a bit late. Having an informal definition of the k-core decomposition (not just a definition of k-core) at the beginning of the introduction may help a reader not familiar with it.

### Scatter plots:

Scatter plots: "k-core value VS k-peak value" for each node in the graph are not shown. This may be interesting.

Note that scatter plots: "k-core value VS degree" are shown in "Kijung, Eliassi-Rad and Faloutsos. CoreScope: Graph Mining Using k-Core Analysis. ICDM2016" leading to interesting insights on graphs. Something similar could be done with k-peak.

###Experiments on large graphs:

As the algorithm is very scalable: nearly linear time in practice (on large sparse real-world graphs) and linear memory. Experiments on larger graphs e.g. 1G edges could be done.

### Implementation:

Even though the algorithm is very easy to implement, a link to a publicly available implementation would make the framework easier to use and easier to improve/extend.

### Link to the densest subgraph:

The $\delta$-core (with $\delta$ the degeneracy of the graph) is a 2-approximation of the densest subgraph (here the density is defined as the average degree divided by 2) and thus the core decomposition can be seen as a (2-)approximation of the density friendly decomposition.

- "Density-friendly graph decomposition". Tatti and Gionis. WWW2015.

- "Large Scale Density-friendly Graph Decomposition via Convex Programming". Danisch et al. WWW2017.

Having this in mind, the k-peak decomposition can be seen as an approximation of the following decomposition:

- 1 Find the densest subgraph.

- 2 Remove it from the graph (along with all edges connected to it).

- 3 Go to 1 till the graph is empty.

### Faster algorithms:

Another appealing feature of the k-core decomposition is that it is used to make faster algorithm. For instance, it is used in https://arxiv.org/abs/1006.5440 and to https://arxiv.org/abs/1103.0318 list all maximal cliques efficiently in sparse real-world graphs.

Can the k-peak decomposition be used in a similar way to make some algorithms faster?

### Section 6.2 not very clear.

### Typos/Minors:

- "one can view the the k-peak decomposition"

- "et. al", "et al"

- "network[23]" (many of these)

- "properties similar to k-core ."

Comments: